Introduction

Capture the Flag (CTF) contests are a staple of security conferences, and BSides Las Vegas is no exception. However, the Pros vs Joes (PvJ) CTF I help support there is a bit unique. Not only is it a blue vs blue CTF with red aggressor and gray user teams, but the game dynamics are a fundamental development point for the CTF team. (There's a lot more to it, such as its educational goal or the fact that we allow blue teams to attack each other on the second day. You can read more about it at http://prosversusjoes.net/.)

Game Dynamics

When we say 'game dynamics', we mean a couple of things. First, we mean what is scored and how much. In our case, that is currently four things:

- hosts (score given to teams for maintaining service availability)

- beacons (score deducted when the red team signals a host is compromised)

- flags (score deducted when the red team breaches specific files)

- tickets (score deducted when the gray team is not being appropriately supported)

At a more fundamental level, though, we mean the scenario the CTF is meant to represent. As a blue team CTF, we try to simulate the real world. As such, starting last year, we began to transition our game model to simulate an economy. Score is not so much granted as transferred. For example, the gold team pays the gray team for accomplishing some task, then the gray team pays a portion of that score to the blue team for maintaining the services necessary to accomplish that task. Alternatively, when the red team (or another blue team) installs a beacon, the score isn't lost but is instead transferred to the team that placed the beacon.
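To make the transfer idea concrete, here is a minimal sketch in R, the language we use for our simulations. The team balances, the amounts, and the 50% share passed from gray to blue are made-up assumptions for illustration, and `transfer()` is a hypothetical helper rather than part of our scoring engine. What it shows is that every scoring event moves score between teams, so the total in play never changes.

```r
# Hypothetical sketch: score as transfers between team balances rather than
# points created from nothing. Names and amounts are illustrative only.
balances <- c(gold = 1000, gray = 0, blue = 0, red = 0)

# Move `amount` from one team's balance to another's; the total is unchanged.
transfer <- function(balances, from, to, amount) {
  balances[from] <- balances[from] - amount
  balances[to] <- balances[to] + amount
  balances
}

# Gold pays gray for completing a task...
balances <- transfer(balances, "gold", "gray", 100)
# ...gray passes a portion (assumed 50%) to the blue team that kept services up...
balances <- transfer(balances, "gray", "blue", 0.5 * 100)
# ...and a beacon moves score from the blue team to the team that placed it.
balances <- transfer(balances, "blue", "red", 10)

print(balances)
# Score is conserved: the total is still the original 1000.
stopifnot(sum(balances) == 1000)
```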

Starting last year, we have also simulated how we expect the game to run. This year we also captured detailed scoring logs. This blog post is about our analysis of the scores from this year's game and how it helps us plan for the future.

Simulation

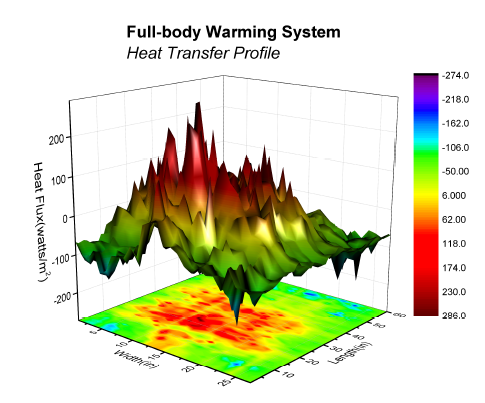

The first thing we do is create a game narrative and scoring profile for the game. The profile defines which servers will come online and go offline, and how much each will be scored per (5-minute) round. It is chosen to produce specific outcomes such as inflation (to decrease the value of points scored early in the game, when teams are just getting going, and to allow dynamism throughout the game).
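As an illustration only, a scoring profile can be thought of as a table of servers with the round they come online, the round they go offline, and their points per round. The server names, rounds, point values, and the assumed 192-round game (two 8-hour days of 5-minute rounds) below are hypothetical, not our actual profile. Summing the online servers per round shows how bringing high-value servers online later makes each early point worth relatively less.

```r
# Hypothetical scoring profile: which servers are scored in which rounds and
# how many points each is worth per 5-minute round. Values are illustrative.
profile <- data.frame(
  server = c("starting-1", "desktop-1", "puzzle-1"),
  start  = c(1, 1, 97),       # first scored round
  end    = c(192, 192, 192),  # last scored round
  points = c(1, 0.5, 5)       # points per round while online
)

n_rounds <- 192  # assumption: two 8-hour days at 12 rounds per hour

# Points available in each round: the sum over servers online in that round.
points_per_round <- vapply(seq_len(n_rounds), function(r) {
  online <- profile$start <= r & profile$end >= r
  sum(profile$points[online])
}, numeric(1))

# The per-round total jumps when the puzzle server comes online in round 97.
points_per_round[c(1, 96, 97, 192)]
```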

We then try to build distributions for how likely servers are to go offline, how likely beacons are and how long they will last, and how many flags will be found. This year we used previous years' simulations and logs, as well as expert opinion, to build the distributions. The distributions we used are below:

```r
### Define distributions to sample from
## Based on previous games/simulations and expert opinion
# Requires the distr and rriskDistributions packages to be installed.

# H&W outage distributions
doutage_count <- distr::Norm(mean = 8, sd = 8/3)
doutage_length <- distr::Norm(mean = 1, sd = 1/3)

# flag distributions
dflags <- distr::Norm(mean = 2, sd = 2/3) # model 0 to 4 flags lost with an average of 2

# beacon distributions
# fit a gamma distribution for beacon length: median 0.75 hours, 70th percentile 4 hours
gamma_shapes <- rriskDistributions::get.gamma.par(p = c(0.5, 0.7), q = c(0.75, 4),
                                                  show.output = FALSE, plot = FALSE)
dbeacons_length <- distr::Gammad(shape = gamma_shapes[["shape"]],
                                 scale = 1/gamma_shapes[["rate"]]) # in hours
dbeacon_count <- distr::Norm(mean = (4-3)/2 + 3, sd = (4-3)/3) # centred between 3 and 4
```

Based on these distributions, we ran Monte Carlo simulations to try to predict the outcome of the game.
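A stripped-down version of such a Monte Carlo loop might look like the base R sketch below. It redraws from the same normal distributions as the outage, flag, and beacon-count definitions above using `rnorm()` and `rgamma()` instead of the distr objects, truncates negative draws at zero, and treats each draw as one team's totals for a whole game. Everything else is an assumption for illustration: the host count, point values, game length, the reading of the beacon count as beacons per game, and especially the gamma parameters, which are placeholders rather than the values fitted by `get.gamma.par()`.

```r
set.seed(2018)  # reproducible draws

# Illustrative assumptions, not the real scoring profile:
n_hosts     <- 10   # hosts each team defends
host_value  <- 10   # points per host per hour of uptime
game_hours  <- 16   # total scored hours
beacon_cost <- 20   # points lost per beacon-hour
flag_cost   <- 50   # points lost per flag
gamma_shape <- 0.3  # placeholder for the fitted gamma shape
gamma_rate  <- 0.2  # placeholder for the fitted gamma rate

# One simulated game for one team, truncating negative draws at zero.
simulate_game <- function() {
  outages      <- max(0, round(rnorm(1, mean = 8, sd = 8 / 3)))
  outage_hours <- sum(pmax(0, rnorm(outages, mean = 1, sd = 1 / 3)))
  flags        <- max(0, round(rnorm(1, mean = 2, sd = 2 / 3)))
  beacons      <- max(0, round(rnorm(1, mean = 3.5, sd = 1 / 3)))
  beacon_hours <- sum(rgamma(beacons, shape = gamma_shape, rate = gamma_rate))
  c(hosts   = host_value * (n_hosts * game_hours - outage_hours),
    beacons = -beacon_cost * beacon_hours,
    flags   = -flag_cost * flags)
}

# Each column is one simulated game; rows are the score components.
sims  <- replicate(10000, simulate_game())
final <- colSums(sims)

rowMeans(sims)                       # expected contribution of each component
quantile(final, c(0.05, 0.5, 0.95))  # spread of possible final scores
```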

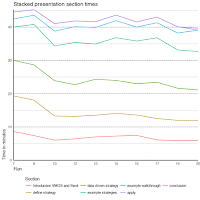

First, we analyzed the expected overall score.

Next, we wanted to look at the components of the score.

Finally, we wanted to look at the distributions of potential final scores and the contributions from the individual scoring types.

The Game

And then we ran the game.

The short answer is that it went VERY differently. We had technical issues that prevented starting the game on time. Some development that would have enabled automatic platform deployment was not completed, some hosts were not available, and some user simulation was also not available. This is not a critique of the development team, who did a crazy-awesome job both rebuilding the infrastructure for this game in the months leading up to it and dynamically deploying hosts during the game. It's just reality. The scoring profile was built for everything we wanted, and I am pleased with how much of it we got on game day.

The Scoreboard

_The Final Scoreboard_

You can find the final scoreboard and scores here. It gives you an idea of what the game looked like at the end, but doesn't tell you much about how we got there. I'm personally more interested in the journey than the destination, because the journey is what helps me improve the game narrative and scoring profile for the next game.

Scores Over Time

The first question is how the scores progressed over time. (You'll have to forgive the timestamps; I believe they are still in UTC.) What we hoped for was relatively slow scoring in the first two hours of the game, which gives teams the opportunity to make up ground later. We also do not want teams' scores to follow a smooth line or curve, since that would mean very little was happening. Sudden jumps up and down, peaks and valleys, mean the game is dynamic.
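One rough way to put a number on "dynamic" is to look at the per-round change in each team's cumulative score: a smooth line has nearly constant changes, while peaks and valleys show up as a large spread and frequent reversals in direction. The two score series below are invented for illustration, and these two metrics are only one possible choice.

```r
# Hypothetical cumulative scores for two teams over eight rounds.
scores <- rbind(
  alpha = c(0, 10, 20, 30, 40, 50, 60, 70),  # smooth: same gain every round
  beta  = c(0, 15, 5, 30, 25, 60, 45, 80)    # jumps up and down
)

# Per-round change in score (one row per team).
deltas <- t(apply(scores, 1, diff))

# Spread of the per-round changes: 0 for a perfectly straight line.
volatility <- apply(deltas, 1, sd)

# How often the score flips between rising and falling.
reversals <- apply(deltas, 1, function(d) sum(diff(sign(d)) != 0))

volatility
reversals
```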

What we see is a relatively slow start to the game. This is because beacons were initially scored below the scoring profile, and because one of three highly scored puzzle servers was mistakenly scored lower from its start late in day 1 until it was corrected at the beginning of day 2.

We do see some trading back and forth. ForkBomb (as an aside, I know they wanted the _actual_ fork bomb code for their name, but for this analysis text is easier) takes an early lead while Knights suffer some substantial losses (relative to the current score). On day 2, scores take off. The teams stay relatively close through the first half of day 2; however, Arcanum takes off mid-day and doesn't look back.

The biggest difference from our simulations is that when teams started to accumulate several beacons, their remediation tended to cause self-inflicted downtime. This caused a compound loss of score: the host scoring they would otherwise have earned plus the cost of the beacons. We did not account for this double loss in our modeling, but plan to in the future.
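The compound loss is simple arithmetic, but it is easy to miss when beacons and outages are modeled independently. In the sketch below, `compound_loss()` is a hypothetical helper and the per-round values are made up; it just adds the host score not earned during self-inflicted downtime to the score lost to beacons.

```r
# Hypothetical helper: total score lost when remediating a beacon takes a host down.
compound_loss <- function(host_points_per_round, down_rounds,
                          beacon_cost_per_round, beacon_rounds) {
  lost_host_score <- host_points_per_round * down_rounds    # not earned while down
  beacon_losses   <- beacon_cost_per_round * beacon_rounds  # transferred away by beacons
  c(host = lost_host_score, beacons = beacon_losses,
    total = lost_host_score + beacon_losses)
}

# A host worth 2 points/round taken down for 6 rounds (30 minutes) after
# carrying a beacon costing 1 point/round for 12 rounds (one hour):
compound_loss(2, 6, 1, 12)
# host = 12, beacons = 12, total = 24
```

A model that only charged for the beacon would predict a loss of 12 here, half the actual total.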

Ultimately I take this to mean scoring worked as we wanted it to. The game was competitive throughout and the teams that performed were rewarded for it.

It does leave the question of what contributed to the score...

Individual Score Contributions

What we expected was relatively linearly increasing host contributions with a bit of an uptick late in the game, and linearly decreasing beacon contributions. We also expected a few significant, discrete losses from flags.

What we find is roughly what we expected, but not quite. The rate of host contribution on day 2 is steeper than expected for both Paisley and Arcanum, suggesting the day 2 services may have been scored slightly high.

Also, no flags were captured. However, we do have tickets which were used by the gold team to incentivize the blue teams to meet the needs of the gray team.

The biggest difference is in beacons, where we see several interesting things. First, for a period on day 2, Knights employed a novel (if ultimately overruled) method for preventing beacons; we see that as a flat beacon score for an hour or two. We also see a shorter flat period later on, when the red team employed another novel (if ultimately overruled) method that was significant enough that it had to be rolled back. We also see how Arcanum benefited heavily from the day 2 rule allowing blue-on-blue aggression. Their beacon contribution actually goes UP for a while (meaning they were gaining more score from beacons than they were losing). On the other side, Paisley suffers heavily from blue-on-blue aggression, with significant beacon losses.

Ultimately this is good. We want players _playing_, especially on day 2. Next year we will try to better model the blue-on-blue action, as well as find ways to incentivize flags and provide a more substantive and direct way for the gray team to motivate the blue team.

Before we move on, there are two final figures to look at. The first shows individual scoring events per team over time. The second shows the sum of beacon scores during each round, which gives an idea of the rate of change of score due to beacons and provides an interesting comparison between teams.

But there's more to consider, such as the contributions of individual hosts and beacons to the score.
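The per-round beacon sum is a group-and-sum over the scoring log. The sketch below assumes a log with one row per scoring event and hypothetical `team`, `round`, `type`, and `points` columns; our actual log format may differ. A positive sum, like team B in round 2 here, is what a team gaining score from beacons it placed on others would look like.

```r
# Hypothetical scoring-event log: one row per scoring event.
events <- data.frame(
  team   = c("A", "A", "B", "A", "B", "B"),
  round  = c(1, 1, 1, 2, 2, 2),
  type   = c("beacon", "beacon", "beacon", "host", "beacon", "beacon"),
  points = c(-1, -1, -1, 5, -1, 2)
)

# Keep only beacon events, then sum their points per team per round.
beacons <- events[events$type == "beacon", ]
per_round <- aggregate(points ~ team + round, data = beacons, FUN = sum)
per_round
```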

Hosts

The first thing we want to look at is how the individual servers influenced the scores. What we want to see is the starting servers contributing relatively little by the late game, desktops contributing less, and puzzle servers contributing substantially once initiated. This is ultimately what we see. (This analysis, done at the end of day 1, is what let us notice puzzle-3 scoring substantially lower than it should have. We can see its uptick on day 2 once we corrected its scoring.)

It's also useful to look at the score of each server relative to the other teams. Here it is much easier to notice the absence of the Drupal server (removed due to technical issues with it). We also notice some odd scoring for puzzle servers 13 and 15, however the contributions are minimal.

More interesting are the differences in scoring for servers such as Redis, Gitlab, and Puzzle-1. Because these servers produced score differentiation between teams, they may be harder to defend. We also notice teams strategically disabling their domain controller, which suggests the domain controller should be worth more to disincentivize this approach.

Finally, for the purpose of modeling, we'd like to understand downtime. It looks like most servers are up between 75% and nearly 100% of the time. We can also look at the distributions per team. We will use these distributions to help inform our simulations for the next game. We are actually lucky to have a range of distributions per team to use for modeling.
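A minimal sketch of turning an availability log into per-team, per-server uptime fractions, assuming (hypothetically) one row per team, server, and round with a logical `up` column. The data are invented, and resampling the observed fractions is just one simple way to feed them into the next simulation.

```r
# Hypothetical availability log: 4 rounds per team and server;
# up = TRUE when the host passed its service check that round.
avail <- data.frame(
  team   = rep(c("A", "B"), each = 8),
  server = rep(rep(c("dc", "redis"), each = 4), times = 2),
  up     = c(TRUE,  TRUE,  TRUE, TRUE,   TRUE, FALSE, TRUE, TRUE,
             FALSE, FALSE, TRUE, TRUE,   TRUE, TRUE,  TRUE, FALSE)
)

# Fraction of rounds each server was up, per team.
uptime <- aggregate(up ~ team + server, data = avail, FUN = mean)
uptime

# Resample the observed fractions as an empirical uptime distribution.
set.seed(1)
draws <- sample(uptime$up, size = 1000, replace = TRUE)
```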

Beacons

For the purpose of this analysis, we consider a beacon new if it misses two scoring rounds (is not scored for 10 minutes).
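This rule amounts to splitting the sorted list of rounds in which a beacon was scored wherever the gap is large enough. The sketch below is a hypothetical helper, `split_beacons()`, that starts a new beacon whenever two or more consecutive rounds go unscored (a gap of more than 2 between scored rounds). It measures length from the first to the last scored round inclusive, assuming 12 five-minute rounds per hour; both the length convention and the input rounds are assumptions.

```r
# Hypothetical helper: split the rounds in which a beacon from one host was
# scored into distinct beacons, using the "missed two rounds" rule.
split_beacons <- function(rounds, missed = 2) {
  rounds <- sort(unique(rounds))
  # A gap of more than `missed` means at least `missed` unscored rounds.
  new_beacon <- c(TRUE, diff(rounds) > missed)
  id <- cumsum(new_beacon)
  beacons <- data.frame(
    start = as.vector(tapply(rounds, id, min)),
    end   = as.vector(tapply(rounds, id, max))
  )
  # Length in hours, first to last scored round inclusive, 12 rounds per hour.
  beacons$hours <- (beacons$end - beacons$start + 1) / 12
  beacons
}

# Missing only round 3 keeps one beacon; missing rounds 7-8 starts a new one.
split_beacons(c(1, 2, 4, 5, 6, 9, 10, 30))
```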

First it's nice to look at the beacons over time. (Note that beacons are restarted between day 1 and day 2 during analysis. This doesn't affect scoring.) I like this visualization as it really helps show both the volume and the length of beacons and how they varied by team. You can also clearly see the breaks in beacons on day two that are discussed above.

The beacon data is especially helpful for building distributions for future games. First we want to know how many beacons each team had:

Day 1:

- Arcanum - 17

- ForkBomb - 24

- Knights - 18

- Paisley - 21

Day 2:

- Arcanum - 13

- ForkBomb - 17

- Knights - 29

- Paisley - 34
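These counts are exactly the kind of input the simulation distributions need. As one simple option, not necessarily the one we will use, the sketch below tabulates the counts above and computes their mean and standard deviation, which could parameterize a normal distribution of beacons per team per day in the same style as `dbeacon_count`. Day 2 includes blue-on-blue beacons, so pooling the two days is itself an assumption.

```r
# Beacon counts per team per day, from the lists above.
beacon_counts <- data.frame(
  team  = rep(c("Arcanum", "ForkBomb", "Knights", "Paisley"), times = 2),
  day   = rep(1:2, each = 4),
  count = c(17, 24, 18, 21, 13, 17, 29, 34)
)

per_day  <- tapply(beacon_counts$count, beacon_counts$day, sum)   # beacons per day
per_team <- tapply(beacon_counts$count, beacon_counts$team, sum)  # beacons per team
per_day
per_team

# Mean and standard deviation of beacons per team per day, pooling both days.
count_mean <- mean(beacon_counts$count)
count_sd   <- sd(beacon_counts$count)
c(mean = count_mean, sd = count_sd)
```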

We also want to know how long the beacons last. The aggregate distribution isn't particularly useful; however, the distributions broken out by team are interesting. They show substantial differences between teams. Arcanum had few beacons, but they lasted a long time. Paisley had very few long beacons (possibly due to self-inflicted downtime). Rather than following a power law distribution, beacon lengths are relatively even, with specific peaks. (This is very different from what we simulated.)

Conclusion

In conclusion, the take-away is certainly not how any given team did. As the movie "Any Given Sunday" implied, sometimes you win, sometimes you lose. What is truly interesting is our ability both to attempt to predict how the game will go and to review afterwards what actually happened in the game.

Hopefully if this blog communicates anything, it's that the scoreboard at the end simply doesn't tell the whole story and that there's still a lot to learn!

Future Work

This blog post is about scoring from the 2018 BSides Las Vegas PvJ CTF, so it doesn't go into much detail about the game itself. There's a lot to learn on the PvJ website. We are also in the process of streamlining the game while making it more dynamic. As mentioned above, the process started in 2017 and will continue for at least another year or two. Last year we added a store so teams can spend their score. We also started treating score as a currency rather than a counter.

This year we added servers that come online and go offline at various times, and began the process of updating the gray team's role by allowing them to play a puzzle challenge hosted on the blue team servers.

In the next few years we will refine score flow, update the gray team's ability to seek compensation from the blue team for poor performance, and add methods to maximize the blue teams' flexibility in play while minimizing their requirements. Look forward to future posts as we get the details ironed out!